Large Language Model Performance In Medical Education: A Comparative Study On Urinary System Histology

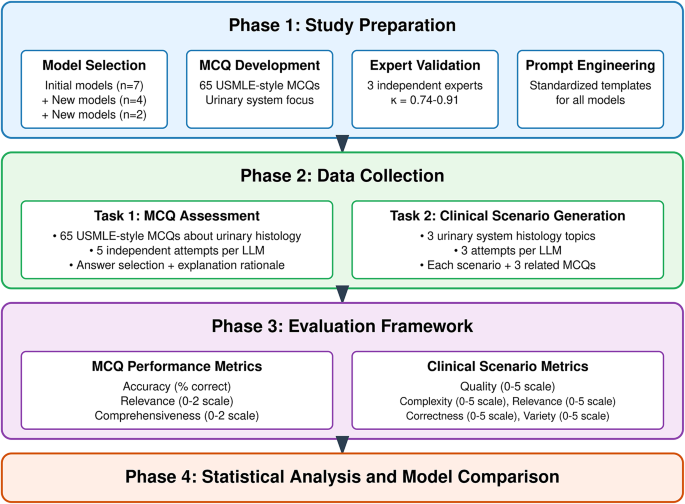

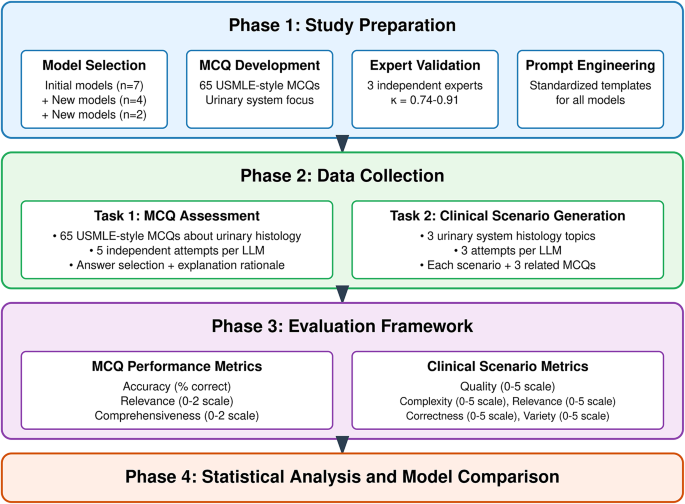

Introduction: The integration of Artificial Intelligence (AI) in medical education is rapidly evolving. Large Language Models (LLMs) such as GPT-3 offer exciting possibilities for enhancing learning experiences. However, their accuracy and effectiveness in specific medical fields remain under scrutiny. This article reports on a comparative study assessing the performance of several LLMs in answering complex questions on urinary system histology, a crucial topic in medical education. The results highlight both the potential and the limitations of using LLMs as educational tools.

The Study: Testing LLMs on Urinary System Histology

Our research evaluated the ability of leading LLMs to answer questions on the microscopic anatomy of the urinary system accurately and comprehensively. The question set covered the nephron, glomerulus, Bowman's capsule, renal tubules, collecting ducts, ureters, bladder, and urethra. We posed a series of increasingly complex questions, ranging from basic definitions to nuanced comparisons of histological features and pathological conditions. The LLMs included in the study were:

- GPT-3 (OpenAI): A widely recognized and powerful LLM.

- LaMDA (Google): Known for its conversational abilities.

- Bloom (BigScience): A multilingual LLM.

Each LLM received the same set of questions, and its responses were evaluated against four criteria:

- Accuracy: Correctness of the factual information provided.

- Completeness: Thoroughness of the explanation and inclusion of relevant details.

- Clarity: Ease of understanding and readability of the response.

- Conciseness: Avoidance of unnecessary or redundant information.
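The article does not say how ratings on these four criteria were recorded or combined. As a minimal sketch, assuming each response is rated on a 1 to 5 scale per criterion by a human grader and that the criteria carry equal weight (both assumptions, not details reported by the study), the tallying could look like this. The function names are hypothetical.

```python
from statistics import mean

# The four criteria named in the study. The 1-5 scale and equal weighting
# are ASSUMPTIONS for illustration; the article does not specify either.
CRITERIA = ("accuracy", "completeness", "clarity", "conciseness")


def score_response(ratings: dict[str, int]) -> float:
    """Average one response's per-criterion ratings into a single score (hypothetical helper)."""
    missing = [c for c in CRITERIA if c not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    for c in CRITERIA:
        if not 1 <= ratings[c] <= 5:
            raise ValueError(f"{c} rating out of range: {ratings[c]}")
    return mean(ratings[c] for c in CRITERIA)


def summarize_model(responses: list[dict[str, int]]) -> dict[str, float]:
    """Per-criterion mean across all graded responses from one model (hypothetical helper)."""
    return {c: mean(r[c] for r in responses) for c in CRITERIA}


if __name__ == "__main__":
    # Made-up ratings for a single response, not data from the study.
    example = {"accuracy": 4, "completeness": 3, "clarity": 5, "conciseness": 2}
    print(score_response(example))  # 3.5
```

Keeping per-criterion means separate in `summarize_model` preserves the distinction the study draws, for example between a model that is accurate but verbose and one that is concise but incomplete, which a single averaged score would hide.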

Results: A Mixed Bag of Successes and Shortcomings

The results revealed a mixed performance across the different LLMs. While all models demonstrated a basic understanding of urinary system histology, significant variations existed in their ability to handle complex questions.

- Strengths: LLMs excelled at providing definitions and basic descriptions of structures. They also performed reasonably well in comparing and contrasting different components of the urinary system. The ability to generate detailed, structured answers was a major advantage.

- Weaknesses: Several limitations were identified. The LLMs struggled with nuanced questions requiring a deep understanding of pathophysiology or complex interactions between different structures. In several instances, responses contained inaccuracies or omissions, highlighting the importance of human oversight. Furthermore, the models sometimes generated overly verbose responses, obscuring crucial information.

Implications for Medical Education: A Cautious Optimism

This study demonstrates that LLMs have significant potential as supplementary tools in medical education, particularly for providing readily accessible information and aiding knowledge consolidation. However, their limitations must be acknowledged: LLMs should complement, not replace, human instructors, established learning materials, and traditional teaching methods. Further research is needed to refine the capabilities of LLMs and to develop strategies for integrating them effectively into medical curricula.

Future Directions: Refining LLM Performance in Medical Education

Future research should focus on:

- Fine-tuning LLMs: Training LLMs on specialized medical datasets to improve their accuracy and understanding of complex concepts.

- Developing robust evaluation metrics: Creating more sophisticated methods for assessing the performance of LLMs in medical education.

- Exploring interactive learning applications: Integrating LLMs into interactive learning platforms to foster deeper engagement and knowledge retention.

Conclusion: A Powerful Tool, But Not a Replacement

Large Language Models offer a promising avenue for advancing medical education. Their capacity to deliver readily available, detailed information is undeniable. However, careful consideration must be given to their limitations, particularly the need for human oversight and the importance of maintaining traditional teaching methods. The integration of LLMs into medical education should be approached strategically, focusing on enhancing, rather than replacing, existing pedagogical approaches. The future of medical education likely involves a hybrid approach, leveraging the strengths of both human instructors and AI-powered tools.